It's almost 2026, so I thought it was time for an update, since quite a lot has changed since the start of the year.

From bare-metal to Proxmox VE

I have always wanted a flexible infrastructure without fixed resource assignments. Fixed assignments were one of the problems with the "first iteration" of my Homelab back in 2019, when I was still running ESXi 6.7. With fixed resources and changing demand, I quickly got rid of ESXi and switched to a bare-metal Ubuntu install, and that is how it ran until June 2025. During my apprenticeship, I learned quite a bit about best practices and how to run IT infrastructure in a more professional way. With that came the desire, and the self-criticism, to improve my Homelab. One of the first things I did was adapt my backup strategy, which I described in detail in my second blog post, "Building a backup NAS...". The setup ran quite well with three servers: one dedicated backup server running TrueNAS, one main server where everything important ran, and one for the Gameserver.

But I always wanted more flexibility, so I started looking into Proxmox VE as a hypervisor. I first came into contact with Proxmox VE out of pure interest during my apprenticeship and vocational college, and decided to give it a try in my Homelab as well. I started with a P2V migration of my Gameserver: I installed Proxmox VE on the Gameserver hardware, migrated the VM over, and then split the Gameserver up into different LXCs. After running it like this for some time, I decided to migrate my main server as well, moving service after service into separate LXCs and VMs. Once all services were migrated, I installed Proxmox VE on my main server hardware too and created a cluster.

Since the migration was a good opportunity to apply my accumulated networking knowledge, I also reworked my network. I went from a simple, largely flat network with only a handful of VLANs to a more complex setup with multiple VLANs, inter-VLAN routing, firewalling, and QoS. Now the servers for my internal services (Home Assistant, Pi-hole, etc.) are on a separate VLAN from my Gameservers, which in turn are separated from the VLAN for my personal devices (PCs, phones, etc.). This way, I can better manage traffic and security between the different segments of my network.

New Hardware

With the goal of having a Proxmox cluster, I also needed a homogeneous hardware setup. Both Proxmox nodes were upgraded to have the same CPU (AMD Ryzen 5 5600X), RAM (32GB DDR4), and storage. Hardware-wise, both servers are nearly identical now. They only differ in the mainboard and case, and the main server still has its GPU, which got upgraded to an Arc A380 for transcoding.

A big problem was the storage. I always used ZFS, but both servers had different storage setups, so they needed to be reworked to have the same configuration. Flash prices are high, so going all-flash isn't an option for now, and I decided on a hybrid setup. ZFS supports a SLOG and an L2ARC, but neither is a general write cache: the SLOG only holds the intent log for synchronous writes, and the L2ARC only caches reads. I was looking for a way to cache writes as well. After some research, I found bcache, which supports writeback caching.

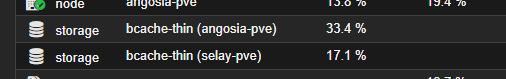

While testing bcache, I tried multiple ways to use it in Proxmox. Using bcache with ZFS felt hacky and unreliable, so I decided to ditch ZFS and go with LVM and bcache. I still needed reliability and data integrity, so both servers got two 1.8TB SAS HDDs in RAID1 as the bcache backing device, with two 256GB SATA SSDs, also in RAID1, as the bcache cache device. After some tuning, the performance wasn't bad at all. I added the bcache device to Proxmox as an LVM-Thin pool and created all VMs and LXCs on that.

For those interested, here's a small guide to replicate the bcache setup in Proxmox VE:

First, create the RAID1 arrays for the cache device (SSDs) and the backing device (HDDs):

$ mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sdb /dev/sdc --force --name "SSDs" --verbose

$ mdadm --create /dev/md1 --level=1 --raid-devices=2 /dev/sdd /dev/sde --force --name "HDDs" --verbose
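A freshly created RAID1 array runs an initial resync in the background. The array is usable during that time, but it can be handy to know when it has finished. The following is a minimal sketch of how that could be checked. The function name `md_is_syncing` and its optional path argument are my own assumptions for illustration, not part of mdadm.

```shell
#!/usr/bin/env bash
# Sketch: report whether an md array is still resyncing or recovering.
# The mdstat path is an optional second argument (hypothetical convenience),
# so the function can also be pointed at a saved copy of /proc/mdstat.
md_is_syncing() {
    local array="$1" mdstat="${2:-/proc/mdstat}"
    # Walk the array's stanza (header line up to the next blank line)
    # and look for a resync/recovery progress line inside it.
    awk -v a="$array" '
        $1 == a { in_block = 1 }
        in_block && NF == 0 { in_block = 0 }
        in_block && /resync|recovery/ { found = 1 }
        END { exit found ? 0 : 1 }
    ' "$mdstat"
}

# Example usage on a real system:
#   md_is_syncing md1 && echo "md1 is still syncing"
```

The function returns success while a resync or recovery line is present for the given array, so it can be used in a simple wait loop.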

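Create the bcache devices. Passing the cache device (-C) and the backing device (-B) in one call formats both:

$ make-bcache -C /dev/md0 -B /dev/md1

Now you should have a new bcache device (/dev/bcache0). When both devices are formatted in the same call, the backing device is normally attached to the cache set automatically. If it is not, attach it manually using the cache set UUID:

$ bcache-super-show /dev/md0 | grep cset.uuid # get the cache set UUID of the cache device

$ echo <UUID> > /sys/block/bcache0/bcache/attach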
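One thing worth checking at this point is the cache mode. bcache defaults to writethrough, so writes are only cached once the mode is switched to writeback. Below is a minimal sketch for inspecting and changing the mode through sysfs. The `BCACHE_SYSFS` variable and both function names are assumptions for illustration; the `cache_mode` file itself is the standard bcache sysfs interface.

```shell
#!/usr/bin/env bash
# Sketch: inspect a bcache device and switch it to writeback caching.
# BCACHE_SYSFS is a hypothetical override so the functions can be tried
# against a scratch directory instead of the real sysfs tree.
BCACHE_SYSFS="${BCACHE_SYSFS:-/sys/block/bcache0/bcache}"

# Print the active cache mode, i.e. the entry the kernel shows in [brackets],
# e.g. "[writethrough] writeback writearound none" -> writethrough
bcache_current_mode() {
    sed -n 's/.*\[\([a-z]*\)\].*/\1/p' "$BCACHE_SYSFS/cache_mode"
}

# Request writeback mode; this needs root on a real system.
bcache_enable_writeback() {
    echo writeback > "$BCACHE_SYSFS/cache_mode"
}

# Example usage on a real system:
#   bcache_current_mode
#   bcache_enable_writeback
```

In writeback mode, data that has not yet been flushed lives only on the cache device, which is one reason to mirror the SSDs as well.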

Now create the LVM physical volume, the volume group, and the thin pool:

$ pvcreate /dev/bcache0

$ vgcreate bcache-vg /dev/bcache0

$ lvcreate -l 100%FREE -T bcache-vg/bcache-thinpool
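Because a thin pool can be overprovisioned, it is worth keeping an eye on how full its data and metadata areas are. The sketch below filters `lvs` output for that. The function name `thin_pool_over` and the threshold of 80 are arbitrary choices for illustration.

```shell
#!/usr/bin/env bash
# Sketch: flag a thin pool whose data or metadata usage reaches a threshold.
# Reads rows of "name data_percent metadata_percent" on stdin and prints
# the name of every row at or above the given limit.
thin_pool_over() {
    local limit="$1"
    awk -v limit="$limit" '$2 + 0 >= limit || $3 + 0 >= limit { print $1 }'
}

# Example usage on a real system (80 is an arbitrary threshold):
#   lvs --noheadings -o lv_name,data_percent,metadata_percent \
#       bcache-vg/bcache-thinpool | thin_pool_over 80
```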

Now you can add the LVM thin pool to Proxmox VE. I did this by editing /etc/pve/storage.cfg, which is shared across the cluster, so the storage is applied to both nodes directly:

lvmthin: bcache-thin
        thinpool bcache-thinpool
        vgname bcache-vg
        content images,rootdir
        nodes selay-pve,angosia-pve

After that, you can create VMs and LXCs on the bcache-thin storage.
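To confirm that the entry was picked up, you can check that the section exists in the config and then ask Proxmox for the storage status. This is only a rough sketch; the helper name `storage_defined` and its optional path argument are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch: check that an lvmthin storage section exists in storage.cfg.
# The config path is an optional second argument (hypothetical convenience),
# so the function can be tried against a copy of the file.
storage_defined() {
    local id="$1" cfg="${2:-/etc/pve/storage.cfg}"
    # Section headers look like "lvmthin: <storage-id>" at the start of a line.
    grep -Eq "^lvmthin:[[:space:]]+${id}[[:space:]]*$" "$cfg"
}

# Example usage on a Proxmox VE node:
#   storage_defined bcache-thin && pvesm status --storage bcache-thin
```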

What's next

This post has already gotten longer than initially planned, and there are still quite a few topics I want to go into more detail about. In particular, the networking setup, Proxmox cluster specifics, and some of the decisions around monitoring, backups, and GPU passthrough deserve their own posts.

I'll cover those in follow-up posts, where I can properly go into the details, lessons learned, and what I would do differently if I had to start over.